Charpentier, Bertrand, and Thomas Bonald. 2019. “Tree Sampling Divergence: An Information-Theoretic Metric for Hierarchical Graph Clustering.” In Proceedings of the 28th International Joint Conference on Artificial Intelligence, 2067–73.

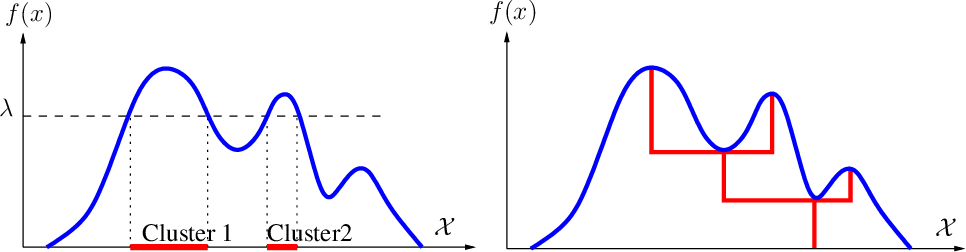

Chaudhuri, Kamalika, and Sanjoy Dasgupta. 2010. “Rates of Convergence for the Cluster Tree.” In Proceedings of the 23rd International Conference on Neural Information Processing Systems, Volume 1, 343–51.

Cohen-Addad, Vincent, Varun Kanade, Frederik Mallmann-Trenn, and Claire Mathieu. 2019. “Hierarchical Clustering: Objective Functions and Algorithms.” Journal of the ACM (JACM) 66 (4): 1–42.

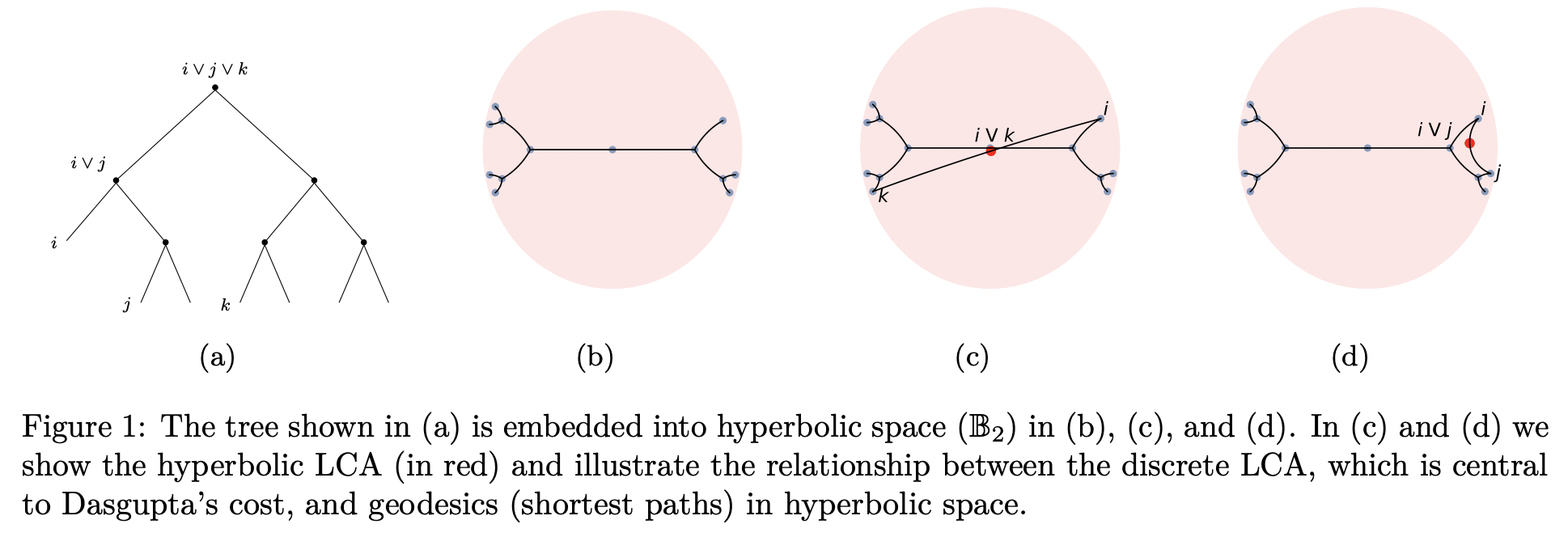

Dasgupta, Sanjoy. 2016. “A Cost Function for Similarity-Based Hierarchical Clustering.” In Proceedings of the Forty-Eighth Annual ACM Symposium on Theory of Computing, 118–27.

Le, Tam, Makoto Yamada, Kenji Fukumizu, and Marco Cuturi. 2019. “Tree-Sliced Variants of Wasserstein Distances.” In Proceedings of the 33rd International Conference on Neural Information Processing Systems, 12304–15.

Lin, Ya-Wei Eileen, Ronald R Coifman, Gal Mishne, and Ronen Talmon. 2023. “Hyperbolic Diffusion Embedding and Distance for Hierarchical Representation Learning.” arXiv Preprint arXiv:2305.18962.

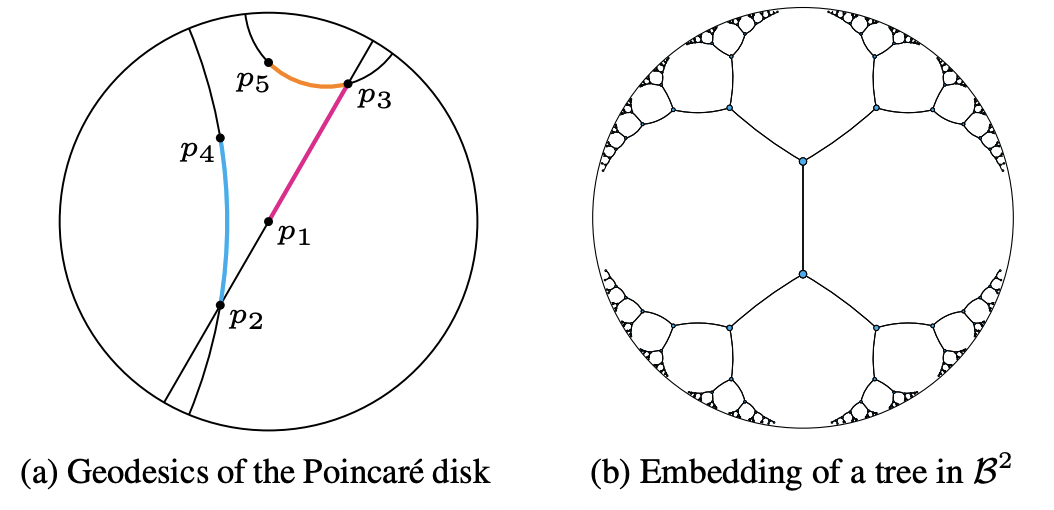

Nickel, Maximilian, and Douwe Kiela. 2017. “Poincaré Embeddings for Learning Hierarchical Representations.” In Proceedings of the 31st International Conference on Neural Information Processing Systems, 6341–50.

Nickel, Maximilian, and Douwe Kiela. 2018. “Learning Continuous Hierarchies in the Lorentz Model of Hyperbolic Geometry.” In International Conference on Machine Learning, 3779–88. PMLR.

Sala, Frederic, Chris De Sa, Albert Gu, and Christopher Ré. 2018. “Representation Tradeoffs for Hyperbolic Embeddings.” In International Conference on Machine Learning, 4460–69. PMLR.

Sonthalia, Rishi, and Anna Gilbert. 2020. “Tree! I Am No Tree! I Am a Low Dimensional Hyperbolic Embedding.” Advances in Neural Information Processing Systems 33: 845–56.