Ali, Alnur, and Ryan J Tibshirani. 2019. “The Generalized Lasso Problem and Uniqueness.” Electronic Journal of Statistics 13: 2307–47.

Eraslan, Gökcen, Eugene Drokhlyansky, Shankara Anand, Evgenij Fiskin, Ayshwarya Subramanian, Michal Slyper, Jiali Wang, et al. 2022. “Single-Nucleus Cross-Tissue Molecular Reference Maps Toward Understanding Disease Gene Function.” Science 376 (6594): eabl4290.

Hou, Wenpin, Zhicheng Ji, Hongkai Ji, and Stephanie C Hicks. 2020. “A Systematic Evaluation of Single-Cell RNA-Sequencing Imputation Methods.” Genome Biology 21: 1–30.

Kiryo, Ryuichi, Gang Niu, Marthinus C Du Plessis, and Masashi Sugiyama. 2017. “Positive-Unlabeled Learning with Non-Negative Risk Estimator.” Advances in Neural Information Processing Systems 30.

Klicpera, Johannes, Aleksandar Bojchevski, and Stephan Günnemann. 2019. “Combining Neural Networks with Personalized PageRank for Classification on Graphs.” In International Conference on Learning Representations.

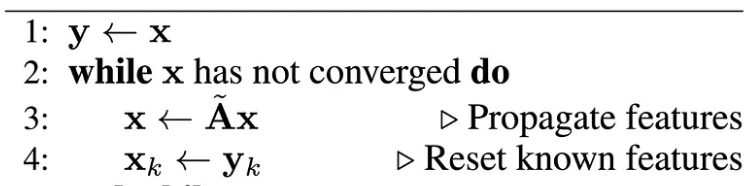

Rossi, Emanuele, Henry Kenlay, Maria I Gorinova, Benjamin Paul Chamberlain, Xiaowen Dong, and Michael M Bronstein. 2022. “On the Unreasonable Effectiveness of Feature Propagation in Learning on Graphs with Missing Node Features.” In Learning on Graphs Conference, 11–11. PMLR.

Tibshirani, Ryan J, and Jonathan Taylor. 2011. “The Solution Path of the Generalized Lasso.” The Annals of Statistics 39 (3): 1335–71.

Wang, Yu-Xiang, James Sharpnack, Alex Smola, and Ryan Tibshirani. 2015. “Trend Filtering on Graphs.” In Artificial Intelligence and Statistics, 1042–50. PMLR.

Zhou, Dengyong, Olivier Bousquet, Thomas Lal, Jason Weston, and Bernhard Schölkopf. 2003. “Learning with Local and Global Consistency.” In Advances in Neural Information Processing Systems, edited by S. Thrun, L. Saul, and B. Schölkopf. Vol. 16. MIT Press. https://proceedings.neurips.cc/paper/2003/file/87682805257e619d49b8e0dfdc14affa-Paper.pdf.

Zhou, Dengyong, Jason Weston, Arthur Gretton, Olivier Bousquet, and Bernhard Schölkopf. 2003. “Ranking on Data Manifolds.” Advances in Neural Information Processing Systems 16.